blindhamster

Bronze Level Poster

Thanks for the help with rectifying my loss of overclocking, Buzz. Will let you know how it goes tonight. +rep

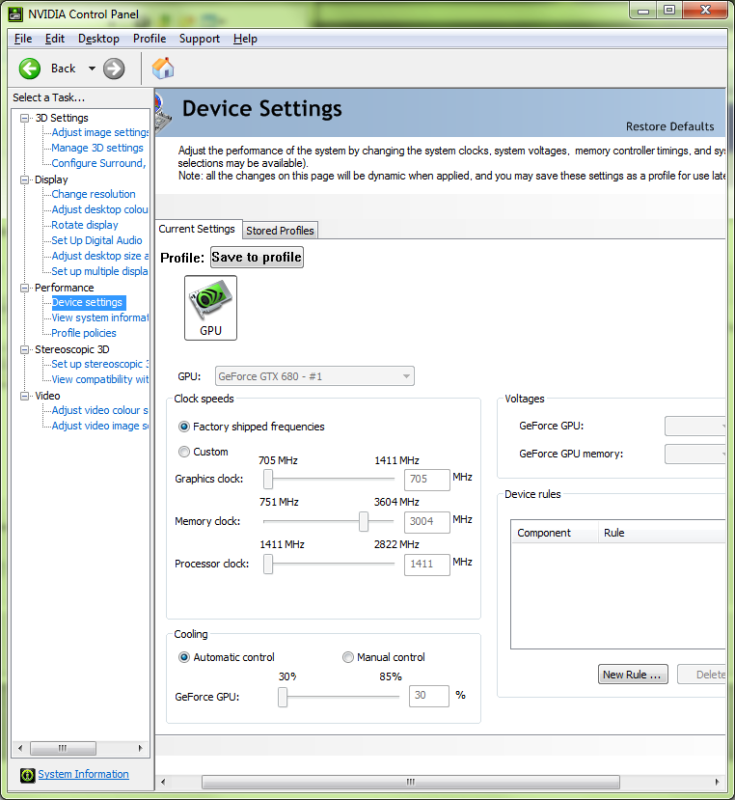

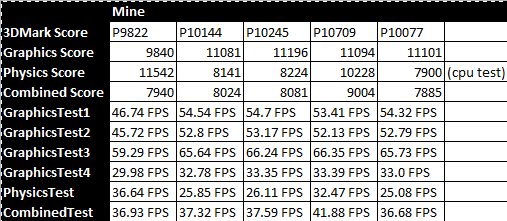

Now I just need to know why my GPU isn't performing correctly...
