JUNI0R

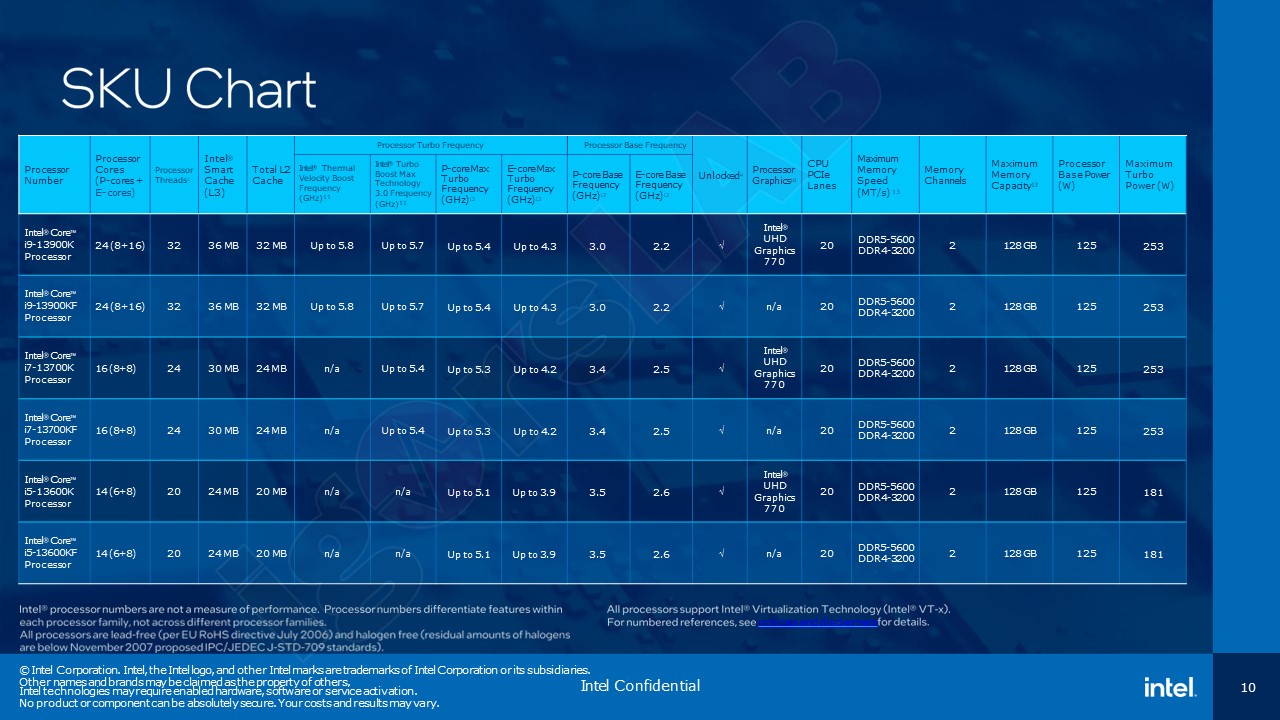

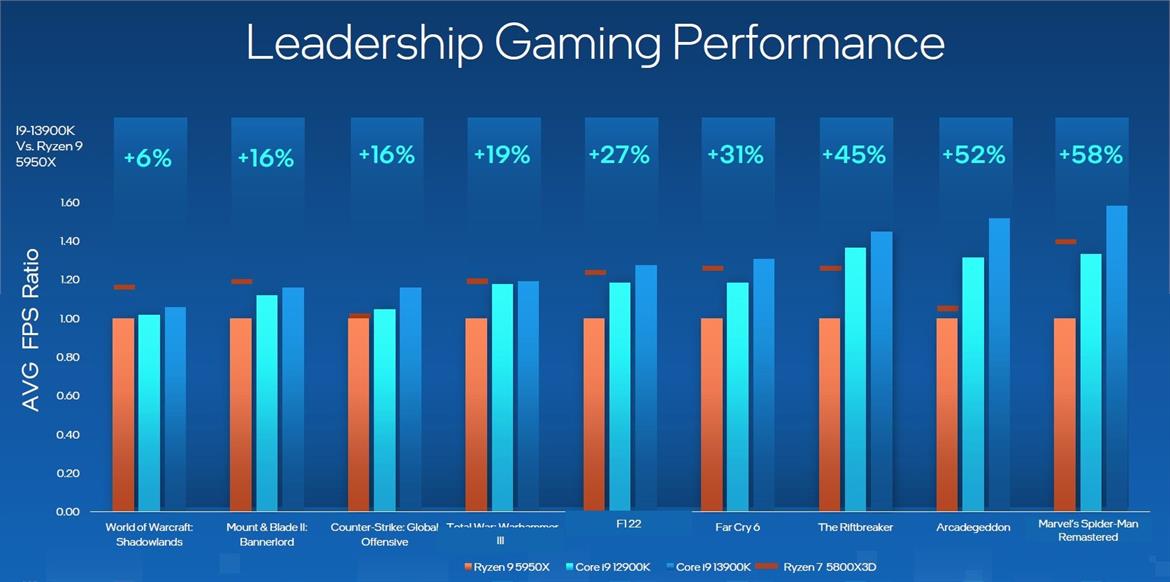

While everyone's been busy buying their AM5 CPUs, Intel has announced the 13th Gen K SKUs, so it might be worth holding off on that buy button a little longer. They're due for release on the 20th of October, and PCS already has them available for pre-order, although as always I'd wait for reviews before going through with a purchase. This generation is more of an evolution than a revolution, but the larger L3 cache and those extra E-cores could really tighten things up between Intel and AMD, and continued DDR4 support will certainly help Intel while DDR5 is at a premium.

Below are a few helpful resources about the launch. As always, take performance metrics with a pinch of salt!

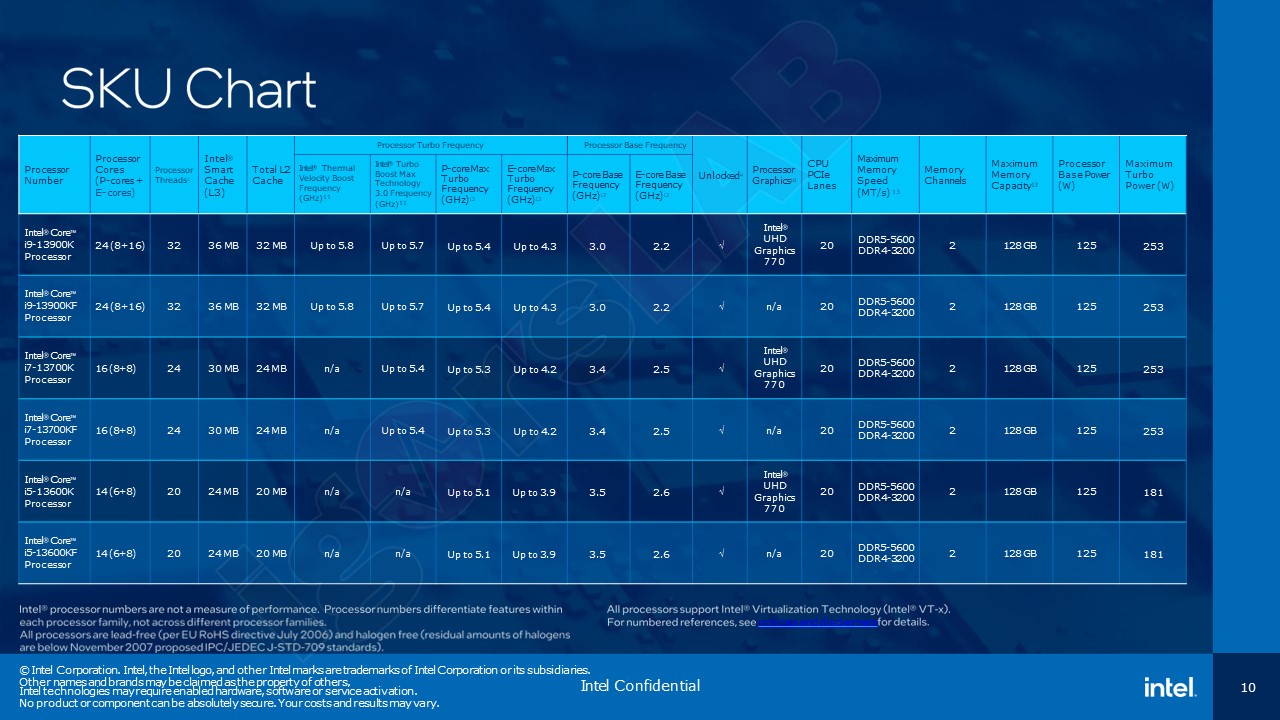

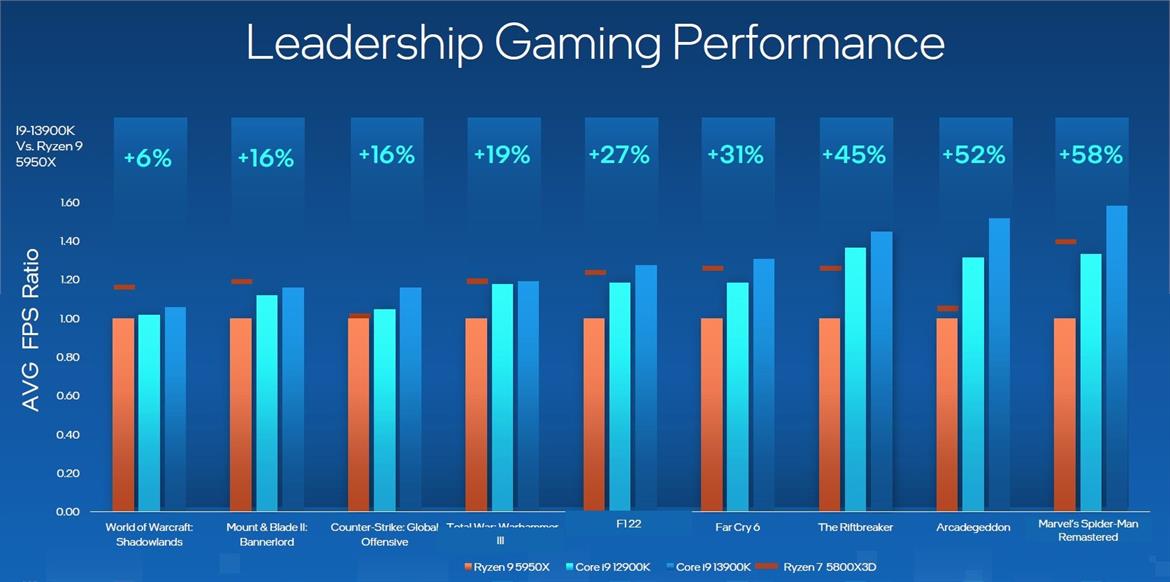

"Intel 13th Gen Core Processors Revealed: Raptor Lake Unleashed" - Screen Grabs from the Event:

13th Gen Explained:
