Last week my latest PCS laptop arrived - it's the fourth one I've had over the past decade.

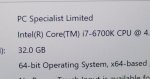

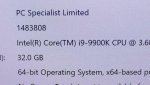

My previous laptop was an Octane III with an i7-6700K and a GeForce GTX 1080. It served me well, but I fancied the extra performance on offer from today's systems: the i9-9900K (yes, despite the heat) and, the clincher, the mobile RTX 2080, which has just become available. I use my laptop for a variety of tasks, from website development to dabbling with VMs to gaming. I chose the i9 over the i7 range because of its higher single-core turbo (useful for gaming) and larger cache (ditto). It also has Hyper-Threading, which the current i7-9700K lacks, so in theory it should have a longer useful lifespan. I finished off the system with a 1TB Samsung 970 M.2 SSD and a 2TB WD Blue SSD for bulk storage.

Here's a photo of the old and new Octanes side by side - the Octane III has now been sold to a friend (a fellow coder and gamer). There's very little external difference between them, but why change something that works? The new Octane VI is on the left and the Octane III is on the right.

The main visible difference is in the screen. Both are 4K and both use AUO panels, but the models differ. The old one is an AUO B173ZAN01.1 IPS panel without G-Sync; the new one is the AUO B173ZAN01.0, which *does* have G-Sync. The new panel also looks grainier because its surface coating is rougher. This shows up as a sort of prismatic effect: whites, for example, don't look as smooth as they do on the 1.1 panel. I've not seen this mentioned anywhere online, so I suspect not many people get to see both models side by side! There are macro photos below:

It's a fair trade-off, though, and having G-Sync will doubtless be useful. Incidentally, anyone who says 4K on a laptop isn't worth it hasn't used one for any length of time. Even if you set Windows' desktop scaling to 200%, so the desktop is laid out like a 1920x1080 display, the crispness of text and graphics is amazing. The fact that the panel has a really good colour gamut is just icing on the cake - it's way better than a generic 72% NTSC TN panel (such as the one I use on my desktop).
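For anyone wondering what 200% scaling works out to on this panel, here's a quick Python sketch: Windows draws UI elements at the scale factor, so the logical desktop size is the physical resolution divided by that factor. The function name is my own, purely for illustration.

```python
def effective_resolution(width_px, height_px, scale_percent):
    """Logical desktop size when the OS scales UI elements by scale_percent."""
    factor = scale_percent / 100
    return round(width_px / factor), round(height_px / factor)


# The 4K panel at 200% scaling gives the same layout space as a 1080p screen.
print(effective_resolution(3840, 2160, 200))  # (1920, 1080)
```

The text is laid out at 1080p size but rendered with four physical pixels for every logical one, which is where the extra crispness comes from.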

Slightly worryingly, the panel on the new laptop developed a vertical band, running from top to bottom, that was darker than the rest of the screen and very noticeable on areas of plain colour. An experimental squeeze at the bottom-left of the bezel (which is where the cabling goes) has solved it for now, but I'll keep an eye on it... I suspect the cable is slightly loose. It's under warranty, but I'd rather not have to send it back!

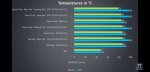

Anyway, on to performance. How do you get an 8-core, hyper-threaded, 95W chip into a laptop? It turns out Clevo have lowered the TDP in the BIOS, although you can change it if you want. Out of the box, it has a 65W TDP (the sustained power limit) with an 83W short-term power limit. This seems to have no effect on lightly threaded loads such as Warcraft, but it does mean that heavily threaded loads will be throttled. No complaints, though, as it turns out the old i7-6700K was similarly throttled in my old Octane. (Intel XTU will show you the details; it's a handy program!)
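To show roughly how the two limits interact, here's a simplified Python sketch. It follows the common description of Intel's scheme: the chip may draw up to the short-term limit (83W here) until a running average of its power reaches the sustained limit (65W), after which it's held there. The averaging time constant `TAU_S`, the 0.1s step and the idle starting point are my assumptions, not values read from this laptop, and real firmware is far less tidy than this.

```python
import math

# Limits as shipped on this laptop, per the BIOS defaults described above.
PL1_W = 65.0  # sustained limit
PL2_W = 83.0  # short-term limit
# Assumption: hypothetical averaging time constant, not read from this machine.
TAU_S = 28.0
DT_S = 0.1  # simulation step in seconds


def simulate(demand_w, seconds, tau_s=TAU_S, dt_s=DT_S):
    """Return (time_s, granted_w, average_w) samples for a constant power demand."""
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # weight of each new sample in the average
    avg = 0.0  # assumption: start from an idle chip
    samples = []
    for i in range(int(round(seconds / dt_s))):
        # Below the sustained limit on average, bursts up to PL2 are allowed;
        # once the average reaches PL1, the chip is held to PL1.
        cap = PL2_W if avg < PL1_W else PL1_W
        granted = min(demand_w, cap)
        avg += alpha * (granted - avg)  # exponentially weighted moving average
        samples.append((round((i + 1) * dt_s, 3), granted, avg))
    return samples


# A lightly threaded load never reaches either limit...
light = simulate(demand_w=40.0, seconds=120.0)
# ...whereas an all-core load bursts at 83W, then settles at 65W.
heavy = simulate(demand_w=120.0, seconds=120.0)
throttled_at = next(t for t, granted, _ in heavy if granted == PL1_W)
print(f"held to {PL1_W:.0f}W after about {throttled_at:.0f}s")
```

That's the pattern I'd expect from the numbers: a game like Warcraft sits well under both limits, while a long compile gets a short burst and then runs at the sustained figure.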

Gaming-wise, no problems whatsoever. Whereas the old laptop ran Warcraft at quality level 7, at 4K, at a steady 60-70fps, the new one runs it at level 10, again at 4K, at a similar frame rate. The lowest I've seen so far was 59fps, and with G-Sync it could dip a bit lower without any problems. I've also received codes for Battlefield V and Anthem by email, so I look forward to seeing those running in due course.
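As for why G-Sync makes dips below 60fps less of a worry, here's a rough Python sketch comparing how long each frame stays on screen under classic double-buffered V-Sync on a fixed-refresh panel versus variable refresh. It assumes a 60Hz maximum refresh and ignores the panel's lower VRR bound, triple buffering and other driver tricks, so treat it as an illustration rather than a model of this laptop.

```python
import math

REFRESH_HZ = 60.0  # assumption: 60Hz maximum refresh
REFRESH_MS = 1000.0 / REFRESH_HZ


def vsync_interval_ms(render_ms):
    # With double-buffered V-Sync, a finished frame waits for the next refresh,
    # so its on-screen time rounds up to a whole number of refresh periods.
    return math.ceil(render_ms / REFRESH_MS) * REFRESH_MS


def vrr_interval_ms(render_ms):
    # With variable refresh, the panel refreshes as soon as the frame is ready,
    # but never faster than its maximum refresh rate.
    return max(render_ms, REFRESH_MS)


for fps in (60, 59, 50):
    render_ms = 1000 / fps
    print(
        f"{fps}fps rendered: V-Sync shows {1000 / vsync_interval_ms(render_ms):.0f}fps, "
        f"VRR shows {1000 / vrr_interval_ms(render_ms):.0f}fps"
    )
```

In this simplified model, a frame that misses the 16.7ms deadline by a fraction of a millisecond halves the displayed rate under V-Sync, while with variable refresh 59fps simply looks like 59fps.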

Here are some GPU-Z screenshots: one taken after a WoW session, and the other showing the highest temperatures, clocks, etc. recorded during the session. One was taken with Print Screen and the other with GPU-Z's built-in tool, which is why they look a bit different!

All in all, it's an impressive upgrade from the old Octane. Clevo have squeezed in some high-end components, but inevitably there are compromises. Most of the power budget appears to go to the GPU, which seems fair, but those who want to tweak can give the CPU priority instead. Even with TDPs lowered from stock, it ticks all the boxes for my use: good for programming/compiling, good for database work, good for gaming.

The only slight concern for me is the potentially dodgy LCD cable, which I'll keep an eye on. Hopefully that squeeze earlier today will have popped the cable back into place and it won't be an issue going forward!
